Accessing Azure Data Lake Storage

In Parquet File Structure, we described the file systems on Azure Data Lake Storage that you use to upload your input data and download your results. This article demonstrates one way of accessing Azure Data Lake Storage from an external application. The example uses Azure Storage Explorer, but we recommend using whichever tool you prefer for this task.

Connect to file system

In this example, we use Azure Storage Explorer to explore the file system and to upload a dataset. This free tool lets you interact with the Azure Data Lake and is built on azcopy.

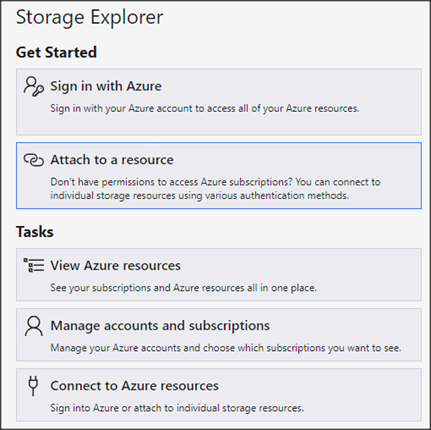

You can connect to a file system by clicking “Attach to a resource” in this Azure Storage Explorer dialog:

Then select ADLS Gen2 container or directory, followed by Shared access signature URL (SAS). At this point, you need to provide a SAS URL. It is listed as URL and SAS on the SAS Tokens page of the System Configurator, for either the import or the export folder. Copy this string from the System Configurator and paste it into Storage Explorer.
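A SAS URL carries its permissions and expiry time in its query string, so it can be worth checking them before pasting the URL into a tool. The Python sketch below decodes these fields using only the standard library. The account name `example` and the signature `PLACEHOLDER` are hypothetical stand-ins, the function names are made up for illustration, and the sketch assumes the expiry (`se`) is written as `YYYY-MM-DDTHH:MM:SSZ`; other ISO 8601 variants would need extra handling.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Meaning of the single-letter codes in the `sp` (signed permissions) field.
PERMISSIONS = {
    "r": "read", "a": "add", "c": "create",
    "w": "write", "d": "delete", "l": "list",
}

# Hypothetical URL: account name and signature are placeholders.
EXAMPLE_SAS_URL = (
    "https://example.blob.core.windows.net/sc-navigator-import/"
    "?sp=racwdl&se=2023-12-03T08%3A29%3A26Z&spr=https"
    "&sv=2022-11-02&sr=c&sig=PLACEHOLDER"
)


def describe_sas_url(sas_url):
    """Return the container, permissions and expiry encoded in a SAS URL."""
    parts = urlsplit(sas_url)
    query = parse_qs(parts.query)  # parse_qs also percent-decodes the values
    # Assumption: expiry uses the full UTC form, e.g. 2023-12-03T08:29:26Z.
    expires = datetime.strptime(
        query["se"][0], "%Y-%m-%dT%H:%M:%SZ"
    ).replace(tzinfo=timezone.utc)
    return {
        "container": parts.path.strip("/"),
        "permissions": [PERMISSIONS.get(ch, ch) for ch in query["sp"][0]],
        "expires": expires,
        "https_only": query.get("spr", [""])[0] == "https",
    }


def is_expired(info, now=None):
    """Report whether the SAS token has passed its expiry time."""
    now = now or datetime.now(timezone.utc)
    return now >= info["expires"]


if __name__ == "__main__":
    info = describe_sas_url(EXAMPLE_SAS_URL)
    print(info["container"], info["permissions"], info["expires"])
    print("expired:", is_expired(info))
```

If the token has expired, or the permissions do not include what you need (for example write access for an upload), the connection or the transfer will be rejected and a fresh SAS URL is required.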

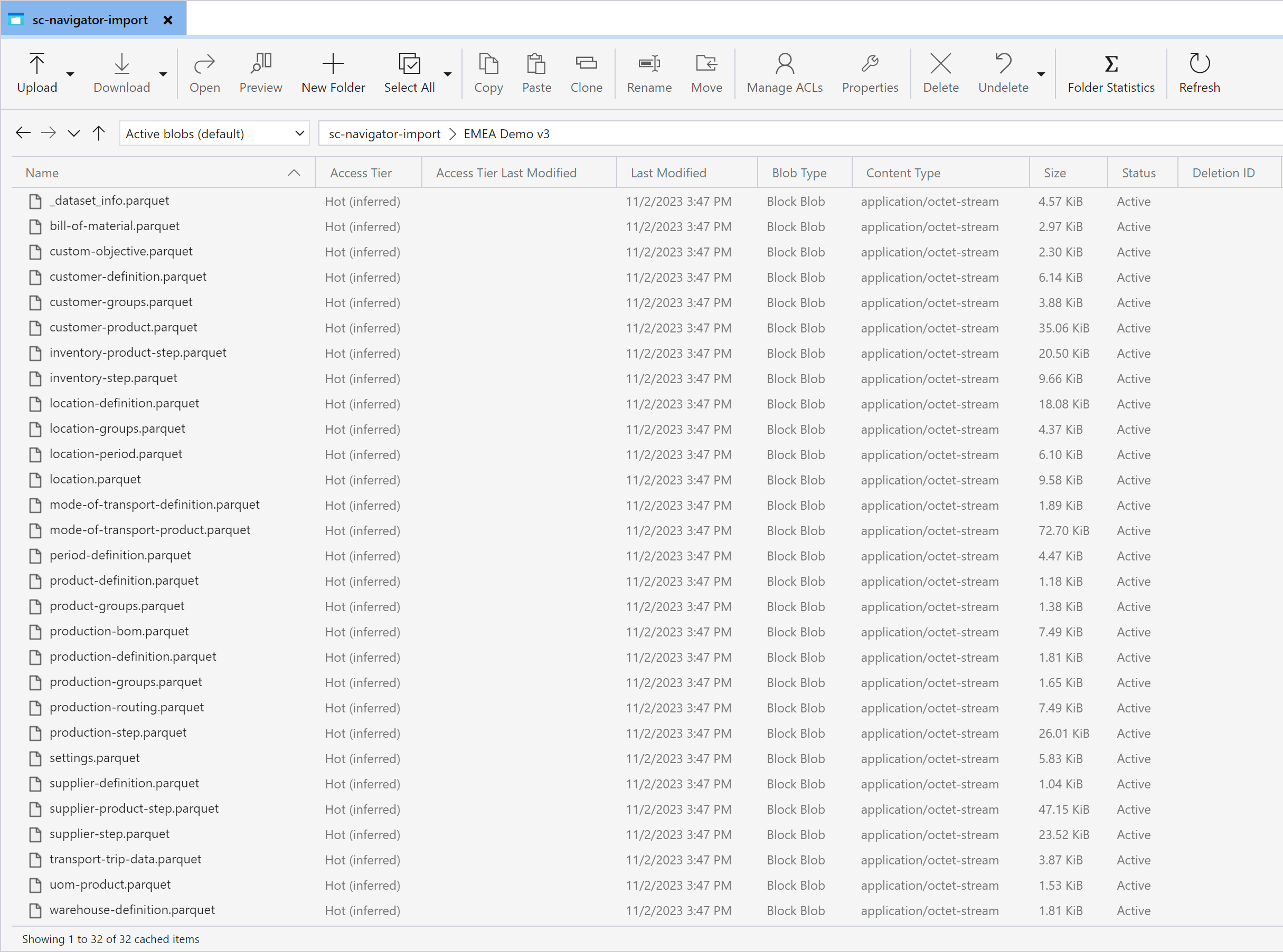

Once this is connected, you can see the overview of the available files and folders:

Here you can not only see the files but also preview them. If you select a file and click Preview, its content is shown, even for a Parquet file.

Upload a folder to Azure Data Lake

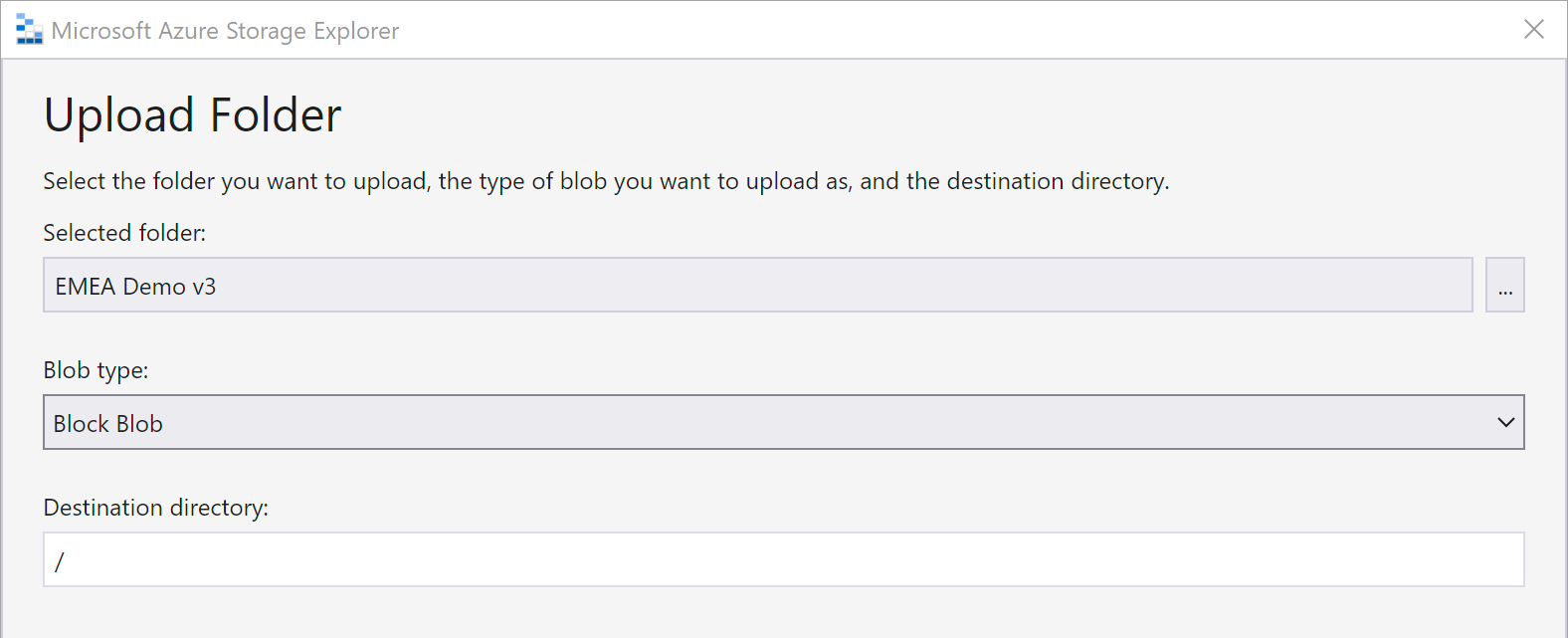

Once you have connected to the -import folder, you can upload a folder containing all the required Parquet files. To do so, click Upload in the toolbar, followed by “Upload Folder”. In the upload folder dialog, select the folder that you want to upload. In this figure, you can see that we are going to upload the folder EMEA Demo v3 to the file system:

The next time you open the SC Navigator, you will see this dataset in the list of available datasets.

As mentioned earlier, Storage Explorer uses azcopy to exchange files with the Azure Data Lake. In the activity log, you can see the azcopy command that was executed:

```powershell
$env:AZCOPY_CRED_TYPE = "Anonymous";
$env:AZCOPY_CONCURRENCY_VALUE = "AUTO";
./azcopy.exe copy "C:\Users\Peter\Desktop\EMEA Demo v3\" "https://[domain].blob.core.windows.net/sc-navigator-import/?sp=racwdl&se=2023-12-03T08%3A29%3A26Z&spr=https&sv=2022-11-02&sr=c&sig=[signature]" --overwrite=prompt --from-to=LocalBlob --blob-type BlockBlob --follow-symlinks --check-length=true --put-md5 --follow-symlinks --disable-auto-decoding=false --recursive --log-level=INFO;
$env:AZCOPY_CRED_TYPE = "";
$env:AZCOPY_CONCURRENCY_VALUE = "";
```

(The [domain] and [signature] were removed from this example for security reasons.)
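Because the upload is just an azcopy call, it can also be scripted. The following Python sketch is one possible way to do that, not the method Storage Explorer itself uses. It assumes azcopy is installed and available on the PATH, and it keeps only the `copy` subcommand and the `--recursive` flag from the logged command, leaving all other options at their defaults. The function names and the SAS URL you pass in are hypothetical.

```python
import subprocess
from pathlib import Path


def build_upload_command(folder, sas_url, azcopy="azcopy"):
    """Build the argument list for a recursive azcopy upload of `folder`."""
    # Passing a list (not a single string) avoids quoting problems with
    # folder names that contain spaces, such as "EMEA Demo v3".
    return [azcopy, "copy", str(folder), sas_url, "--recursive"]


def upload_folder(folder, sas_url, azcopy="azcopy"):
    """Upload a local folder to the container addressed by `sas_url`."""
    folder = Path(folder)
    if not folder.is_dir():
        raise NotADirectoryError(folder)
    # check=True raises CalledProcessError if azcopy exits with an error.
    subprocess.run(build_upload_command(folder, sas_url, azcopy), check=True)
```

A call would look like `upload_folder(r"C:\Users\Peter\Desktop\EMEA Demo v3", sas_url)`, where `sas_url` is the import-folder string copied from the System Configurator.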